AI trust signals are quietly deciding which brands show up and which ones get left out.

Whether it’s ChatGPT recommending a meal delivery kit, Perplexity listing the best standing desks, or Claude suggesting software tools, these engines use trust signals to decide what content and products to show first.

But here’s the thing. Most brands don’t know what those signals are. And even fewer are optimizing for them.

So we decided to find out.

We ran dozens of real-world prompts through ChatGPT, Perplexity, and Claude to reverse-engineer how AI trust signals influence the results. What we found was a clear pattern of specific cues that shape what these AI tools recommend, cite, and summarize.

Some were obvious, like user reviews or expert mentions. Others were more surprising, like how structured content or certification badges can make a brand more visible in AI-generated answers.

This guide breaks it all down.

You’ll get a curated list of the 10 most important AI trust signals. Each one is backed by real prompts, screenshots, and insights from three of the most advanced generative search engines today.

If your brand wants to be part of the answer in AI search, this is what you need to know.

Authoritative Lists and Ranked Mentions

When AI tools decide which products or services to recommend, one of the strongest trust signals they rely on is inclusion in expert-curated lists.

These lists are clear, structured, and usually come from trusted editorial sources like Wirecutter, PCMag, or TechRadar. Since they’re easy to understand and packed with authority, AI search engines love them.

🔍 What We Tested

We asked three leading generative engines the exact same question:

What are the best laptops for video editing in 2025?

Here’s what we saw:

- Perplexity delivered a highly structured list with product images, specs, and star ratings. It pulled directly from sources like PCMag, Rtings.com, and Reddit. The results looked almost like a shopping guide, with prices and performance badges included.

- ChatGPT gave a ranked answer with categories like “Best Overall” and “Best Value.” It cited LaptopMag, PCMag, and Tom’s Guide. It also included a comparison table that clearly reflected the layout of the original editorial sources.

- Claude focused more on context. It grouped laptops by user type, like Mac vs. Windows vs. budget, and referenced expert lists in a more conversational way. Instead of just listing items, it explained why certain picks were recommended.

While each tool presented results in its own voice, they all leaned heavily on ranked editorial content as the foundation.

🧠 Why This Matters

When your product or brand is featured in a respected roundup or buying guide, it sends a strong signal to AI tools. These sources are:

- Easy to scan and extract from

- Built on existing authority and reputation

- Often refreshed with up-to-date insights

That makes it more likely you’ll be:

- Named in AI-generated product recommendations

- Shown in list formats or comparisons

- Cited as a trustworthy source in summaries

If you’re not in those lists, it’s a lot harder to earn visibility. Even if your product is a strong fit.

✅ Brand Takeaway

To boost your presence in AI-generated answers:

- Get your product or brand included in respected “Best Of” lists

- Build relationships with reviewers and journalists in your space

- Highlight third-party mentions on your own site for extra visibility

And structure your product info in a way that’s easy to find and understand. The more clearly your mention is presented, the easier it is for AI to pick it up.

🔎 Engine Notes

- Perplexity built its entire answer around expert lists and buyer guides. It pulled directly from sources with strong headlines, rankings, and citations.

- ChatGPT followed the same pattern, grouping options into helpful categories like “Best Value” or “Most Portable.” It mimicked the layout of well-formatted editorial content.

- Claude used a more narrative approach but still leaned on expert lists. It focused on explaining the reasoning behind each pick, often using phrasing straight from the articles it referenced.

Awards and Certifications

Some of the clearest AI trust signals come from certifications and formal awards. These are third-party validations that show a product meets a specific standard, whether it’s about sustainability, safety, or performance.

Think: USDA Organic, MADE SAFE, ISO certifications, or Editor’s Choice awards. These signals are easy to verify, and AI tools use them to make more confident recommendations.

🔍 What We Tested

We asked ChatGPT, Claude, and Perplexity:

What are the best certified organic skincare brands?

All three tools responded by naming specific brands and listing their certifications. We saw recurring references to badges like:

- USDA Organic

- Leaping Bunny (cruelty-free)

- ECOCERT

- MADE SAFE

- Soil Association

Each engine had its own approach:

- Perplexity pulled answers from editorial sources like The Good Trade and HoneyGirlOrganics.com. It highlighted products with visible certification tags and review-backed descriptions.

- ChatGPT delivered a clear list that grouped brands by certification and included tips for avoiding greenwashing. It treated badges as a core part of brand trust.

- Claude offered a categorized breakdown by price tier and included the certifying body next to each brand. It explained why each certification mattered and what it meant for product quality.

🧠 Why This Matters

Certifications build instant trust. They show that your product has been reviewed and approved by a credible outside source.

AI engines recognize this. When they see known certifications attached to your product (especially across multiple sources), they are more likely to recommend you.

This is especially important in categories like:

- Skincare and cosmetics

- Food and supplements

- Health and wellness

- Sustainability-focused products

The same logic applies to awards. If your brand has received recognitions like “Best Of” or “Editor’s Choice,” that’s a signal of quality and authority that AI tools notice.

✅ Brand Takeaway

If your product or service has any kind of certification or award, make sure it’s:

- Clearly displayed on your website and product pages

- Marked up with structured data if possible

- Repeated in press mentions, reviews, and marketplaces
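What does “marked up with structured data” look like in practice? Below is a minimal Python sketch that emits schema.org JSON-LD for a product page and lists its badges under the `award` property. The product name, brand, and badge strings are hypothetical placeholders, and we did not measure whether any specific engine reads this markup, so treat it as a starting point rather than a guarantee.

```python
import json


def product_jsonld(name: str, brand: str, awards: list[str]) -> str:
    """Return a JSON-LD string for a schema.org Product with its awards listed.

    All values passed in are hypothetical examples; swap in your real data.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        # schema.org's `award` property takes text and may hold several values.
        "award": awards,
    }
    return json.dumps(data, indent=2)


# Hypothetical product and badges, for illustration only.
markup = product_jsonld(
    "Daily Calm Face Oil",
    "ExampleBotanicals",
    ["USDA Organic", "Leaping Bunny Certified", "MADE SAFE Certified"],
)
print(markup)
```

Paste the printed JSON into a `<script type="application/ld+json">` tag on the product page, and keep each badge name identical to how the certifying body writes it so it matches your press and marketplace mentions.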

Don’t assume AI tools will dig to find this info. The more visible and consistent your trust signal is, the more likely it will be picked up and cited in generative answers.

🔎 Engine Notes

- Perplexity favored sources that featured product details alongside badges or certifications. It pulled heavily from list-style articles and product descriptions with verified labels.

- ChatGPT organized its list by certification and included practical tips for consumers. It clearly weighted third-party validation as a major trust factor.

- Claude emphasized transparency, formulation quality, and recognizable certification bodies. It treated certifications as central to its reasoning, not just supporting evidence.

User Reviews and Ratings

When you’re shopping for something online, you probably check the reviews. So do AI tools.

User reviews and ratings are some of the most powerful AI trust signals. They help AI understand what real people think about a product, what works, what doesn’t, and what keeps coming up again and again.

🔍 What We Tested

We asked ChatGPT, Claude, and Perplexity:

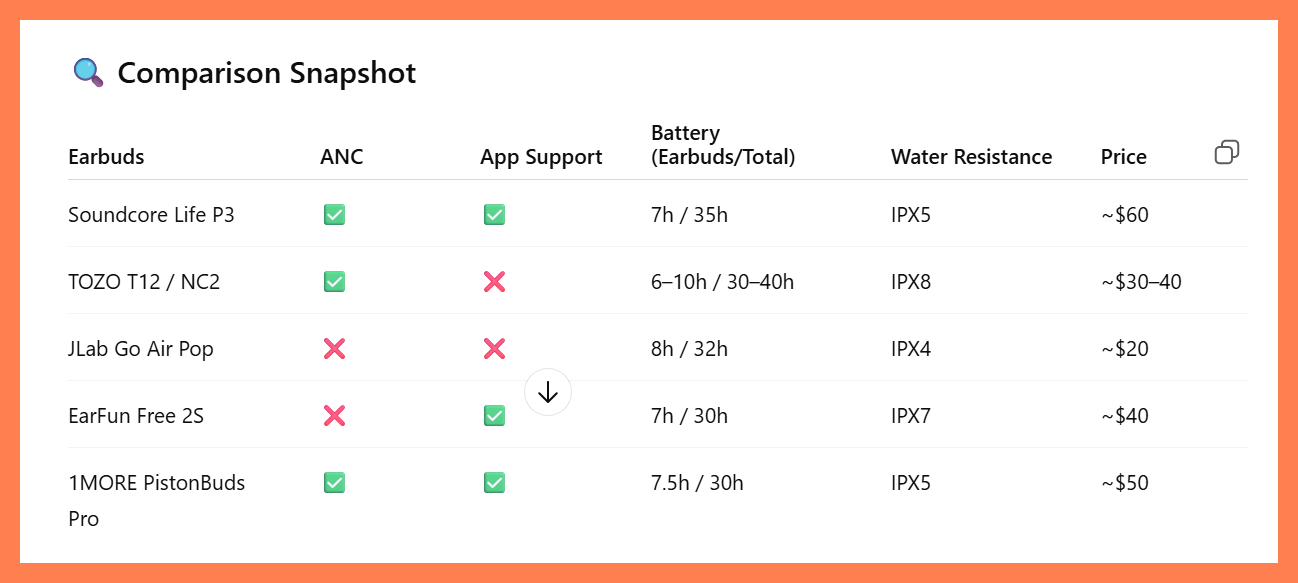

What are the best budget-friendly wireless earbuds?

All three tools returned product lists shaped by user sentiment. They didn’t just list specs. They featured products with:

- High star ratings

- Lots of reviews

- Repeated praise for comfort, battery life, sound quality, and price

Here’s how each engine handled reviews:

- Perplexity showed products with visible star ratings and highlighted phrases like “praised for comfort” and “eco-friendly design.” It pulled from Best Buy, Walmart, and review-backed articles.

- ChatGPT listed earbuds with tens of thousands of positive reviews and mentioned what users liked best. It even paraphrased real comments, like “better than Bose for the price.”

- Claude leaned on expert roundups that summarized user experiences. Its answers echoed the kinds of insights found in actual customer feedback.

🧠 Why This Matters

Reviews help create trust. When thousands of people agree a product is great (or not), AI tools treat that as meaningful data.

But it’s not just about five-star scores. What matters most is:

- How many people have reviewed it and how recently

- What they say in the review (specific pros and cons)

- Where those reviews appear (Amazon, Best Buy, Reddit, etc.)

AI tools look for clear patterns. If positive reviews show up consistently, across trusted platforms, the product is more likely to get recommended.

✅ Brand Takeaway

If you want your products to show up in AI search:

- Ask customers for reviews regularly

- Encourage them to mention specific features or outcomes

- Showcase your best reviews on your site

- Use structured data to help engines find and read them

And stay active. A product with 500 recent reviews can often outperform one with 5,000 old ones.
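To give engines machine-readable access to your reviews, schema.org defines `AggregateRating` and `Review` types. The sketch below assumes you already collect genuine reviews with a date, rating, and text, and rolls them into a Product block. Including `datePublished` on each review is one way to make recency visible. The product, reviewers, and review text are hypothetical.

```python
import json
from statistics import mean


def review_jsonld(product: str, reviews: list[dict]) -> dict:
    """Build a schema.org Product with an AggregateRating and individual Reviews."""
    ratings = [r["rating"] for r in reviews]
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": round(mean(ratings), 1),
            "reviewCount": len(reviews),
            "bestRating": 5,
        },
        "review": [
            {
                "@type": "Review",
                "author": {"@type": "Person", "name": r["author"]},
                "datePublished": r["date"],  # ISO 8601 dates make recency explicit
                "reviewRating": {"@type": "Rating", "ratingValue": r["rating"], "bestRating": 5},
                "reviewBody": r["body"],  # specific pros and cons, in the customer's words
            }
            for r in reviews
        ],
    }


# Hypothetical reviews, for illustration only.
sample = [
    {"author": "Jordan P.", "date": "2025-03-02", "rating": 5,
     "body": "Battery easily lasts my whole commute."},
    {"author": "Sam R.", "date": "2025-04-18", "rating": 4,
     "body": "Comfortable fit; bass is a little light."},
]
data = review_jsonld("ExampleBuds Lite", sample)
print(json.dumps(data, indent=2))
```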

🔎 Engine Notes

- Perplexity favored products with strong reviews and visibility on retail sites. It pulled in both star ratings and phrases that reflect common buyer feedback.

- ChatGPT highlighted what users were saying on Amazon and in product summaries. It used that information to rank and explain its picks.

- Claude used expert reviews to surface sentiment, focusing on what real users value in budget products. It prioritized usefulness and reliability over technical specs alone.

Structured Product Information

AI tools love structure. When brands present their offerings in a clear, consistent format (think feature tables, comparison charts, or standardized product specs), it becomes much easier for generative engines to pull that data into useful summaries and recommendations.

This trust signal is more than hype. It’s clarity.

🔍 What We Tested

We asked the engines:

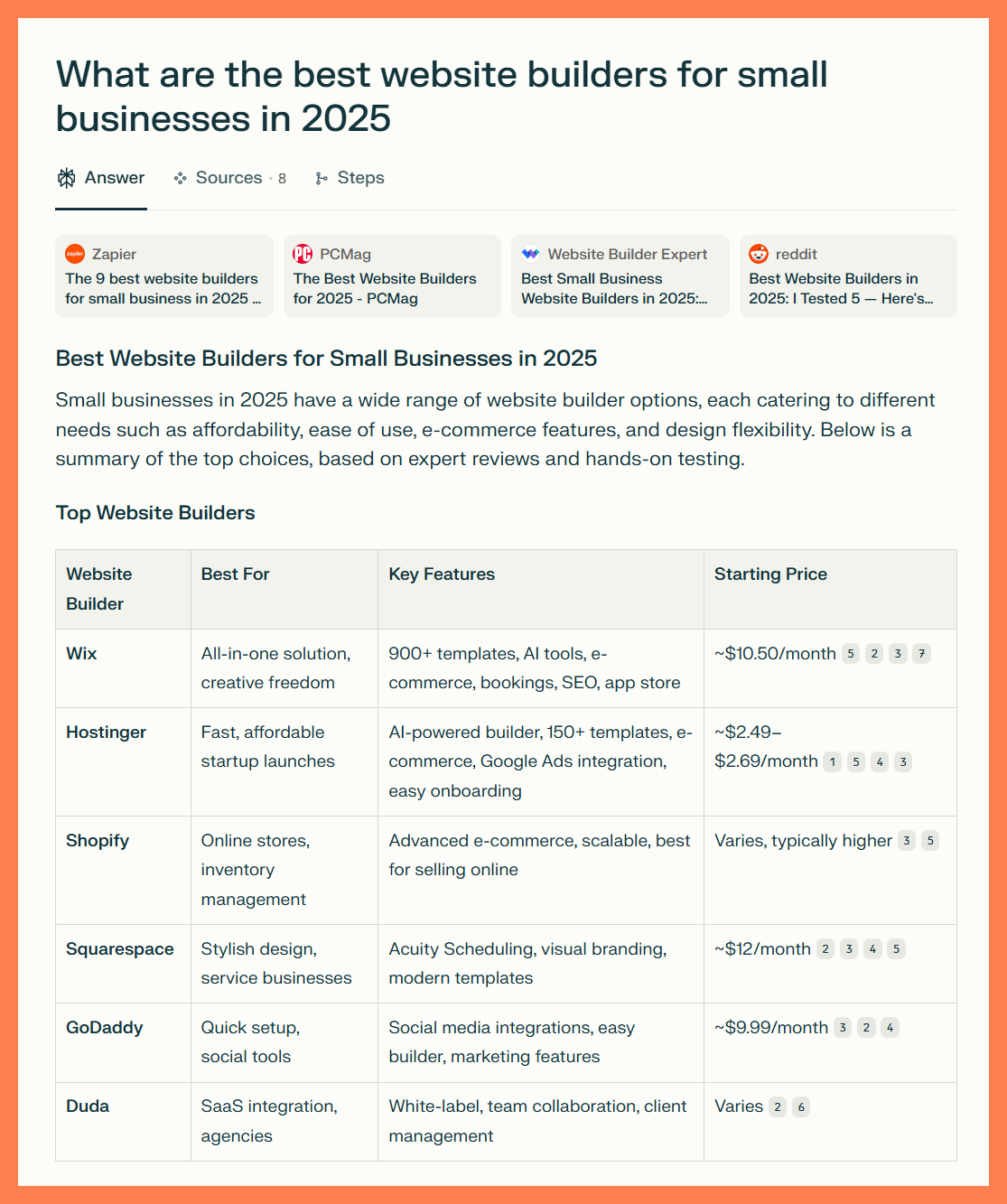

What are the best website builders for small businesses in 2025?

The results made something very clear. When companies like Wix, Shopify, and Hostinger organize their product features in a structured way, they show up more often and more clearly in AI-generated answers.

- Perplexity included a full comparison table listing providers, features, and pricing ranges. This wasn’t scraped at random. It came directly from sources that had structured summaries embedded in them, like feature tables and side-by-side reviews.

- ChatGPT didn’t just list website builders. It categorized them by use case (for example, best for ecommerce or best for visual brands) and summarized core features using bullet points and product spec highlights. These often mirrored how the brands present information on their own sites or in editorial reviews.

- Claude emphasized key features like drag-and-drop builders, SEO tools, and AI assistants. These were consistent across top recommendations, which suggests it pulled from structured, repeatable product data.

🧠 Why This Matters

Structured information acts as one of the most consistent AI trust signals. It’s machine-readable, which makes it easier for AI engines to:

- Compare products on specific criteria

- Summarize strengths or use cases in plain language

- Match solutions to user intent more precisely

Even when two products are equally strong, the one with clearer structure is more likely to be selected. That’s because it’s easier for the model to understand and explain.

✅ Brand Takeaway

Make your offerings AI-legible. That means:

- Use tables, grids, and bullet points to present features, benefits, and pricing

- Include consistent product specs (like RAM, battery life, integrations, and use case tags)

- Avoid burying key features in long paragraphs or vague copy

- Mirror the formatting used by trusted comparison sites

This isn’t just good user experience. It’s smart AI visibility strategy. If you want to see how your own product pages stack up, check out our guide on how to optimize for Google AI Mode. It breaks down what structured content looks like in real generative results.
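One practical way to keep specs consistent is to define a single spec template and refuse to publish a product that is missing a field. This hypothetical Python sketch does that and outputs the specs as schema.org `additionalProperty` entries (`PropertyValue` items with an optional `unitText`). The field list, plan name, and price are illustrative assumptions; use whatever attributes buyers in your category actually compare.

```python
import json

# Hypothetical spec template: every product page uses the same labels, order, and units.
SPEC_FIELDS = [
    ("Storage", "GB"),
    ("Integrations", None),
    ("Best for", None),
]


def product_with_specs(name: str, price: str, specs: dict) -> dict:
    """Return schema.org Product JSON-LD, raising if any templated spec is missing."""
    missing = [label for label, _ in SPEC_FIELDS if label not in specs]
    if missing:
        raise ValueError(f"{name} is missing specs: {missing}")
    props = []
    for label, unit in SPEC_FIELDS:
        prop = {"@type": "PropertyValue", "name": label, "value": specs[label]}
        if unit:
            prop["unitText"] = unit  # state units explicitly instead of burying them in copy
        props.append(prop)
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {"@type": "Offer", "price": price, "priceCurrency": "USD"},
        "additionalProperty": props,
    }


plan = product_with_specs(
    "ExampleSite Business Plan",
    "29.00",
    {"Storage": 50, "Integrations": "Payments, email marketing",
     "Best for": "Small business ecommerce"},
)
print(json.dumps(plan, indent=2))
```

The validation step is the design choice that matters here: a missing spec fails loudly before publishing, so every page in the catalog stays comparable field by field.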

🔎 Engine Notes

- Perplexity responded most strongly to editorial reviews that used side-by-side comparison charts. These structured formats shaped the entire layout of its answer.

- ChatGPT favored clarity and categorization. Brands that clearly explained who their product is for and what features are included (such as “Best for small business ecommerce”) were featured more prominently.

- Claude emphasized capabilities like AI-powered builders, mobile responsiveness, and SEO optimization. This suggests it drew from structured product pages and comparison content more than subjective reviews.

Primary Source Trustworthiness

When AI engines recommend advice-heavy content, especially in sensitive or regulated categories like taxes, healthcare, or finance, they are extra careful about who is giving that advice. Verified expertise matters more than ever.

🔍 What We Tested

We asked:

What are the best tax filing tips for freelancers in 2025?

Across all three engines, we looked at how much weight was given to expert-backed advice, known authors, or clearly sourced professional content.

Perplexity:

- Cited major tax guidance providers like TurboTax, Everlance, and Boxelder Consulting right up top, complete with links to articles written by CPAs or financial editors.

- Repeatedly emphasized authority cues such as “certified tax professionals,” “IRS rules,” and “tax experts recommend.”

- Pulled structured tips with attributions, giving extra weight to well-organized checklists authored by trusted sites.

ChatGPT:

- Referenced tools like QuickBooks, FreshBooks, TurboTax, and H&R Block, linking practical advice to tools created by financial experts.

- Gave a standout tip to consult a CPA if income exceeded $80K, showing a trigger threshold where expert input becomes necessary.

- Emphasized “Pro Tips” throughout, often derived from expert consensus, not crowdsourced forums or blogs.

Claude:

- Delivered the most explicit expert emphasis, stating directly that freelancers should work with a tax professional who understands self-employment tax issues.

- Named quarterly deadlines, IRS forms, and deduction categories with formal precision, echoing the tone of official IRS documentation and professional tax prep guides.

- Clearly leaned on primary sources and first-party tax advisory brands.

🧠 Why This Matters

AI tools are increasingly attuned to source quality, especially when there is risk involved in taking bad advice. They reward content that checks at least one of these boxes:

- Written by credentialed experts such as CPA, MD, or PhD

- Published on platforms known for trust and accuracy like TurboTax, NerdWallet, or Mayo Clinic

- Cited by multiple other trusted sources

- Clearly attributed, with the author’s name and credentials stated

✅ Brand Takeaway

If you are in a category where trust is paramount:

- Add expert bylines with credentials such as CPA, MD, or PhD

- Use schema markup for the author and reviewedBy properties, and specify the Person type when possible

- Clearly signal affiliations with reputable institutions

- Where possible, have real professionals write or at least fact-check your advice

Even when you are not in a regulated niche, showing expertise clearly and visibly makes it easier for AI to trust and recommend your content.
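Here is a minimal sketch of the author and reviewer markup described above. In schema.org, `author` belongs to the Article while `reviewedBy` is defined on `WebPage`, so this version nests the Article inside the page as `mainEntity`. Credentials go in `honorificSuffix` and institutional ties in `affiliation`. The people, firm, blog, and headline are hypothetical.

```python
import json


def person(name: str, credential: str, title: str, org: str) -> dict:
    """A schema.org Person node carrying a visible credential and an affiliation."""
    return {
        "@type": "Person",
        "name": name,
        "honorificSuffix": credential,  # e.g. "CPA", "MD", "PhD"
        "jobTitle": title,
        "affiliation": {"@type": "Organization", "name": org},
    }


def advice_page(headline: str, author: dict, reviewer: dict, publisher: str) -> dict:
    """WebPage whose main Article has a credentialed author and a named reviewer."""
    return {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "reviewedBy": reviewer,  # reviewedBy is a WebPage property, not an Article one
        "mainEntity": {
            "@type": "Article",
            "headline": headline,
            "author": author,
            "publisher": {"@type": "Organization", "name": publisher},
        },
    }


page = advice_page(
    "Quarterly Estimated Taxes for Freelancers",
    author=person("Alex Rivera", "CPA", "Senior Tax Advisor", "Example Tax Partners"),
    reviewer=person("Dana Lee", "EA", "Enrolled Agent", "Example Tax Partners"),
    publisher="Example Finance Blog",
)
print(json.dumps(page, indent=2))
```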

🔎 Engine Notes

- Perplexity prioritized sources with strong expert identity and structured takeaways.

- Claude leaned heavily on professional context, stating that human advisors should be involved in key decisions.

- ChatGPT blended practical tips with name-brand tool references, but was more likely to credit expert sources loosely instead of explicitly naming authors.

Detailed First-Person Reviews and Testimonials

When you’re buying something big, like a Peloton Bike+, you want to hear from people who’ve actually used it. AI tools feel the same way.

First-person reviews and long-form testimonials help generative engines understand what ownership really looks like. They’re packed with signals about satisfaction, usability, value over time, and even emotional impact.

🔍 What We Tested

We asked:

What do users say about the Peloton Bike+ after one year?

Our goal was to see if engines could detect long-term product value and pull in meaningful, real-world experiences. We weren’t just looking for star ratings. We wanted to see stories.

Here’s how each engine handled it:

- Perplexity highlighted consistent themes from review sites, including performance, motivation, and durability. But it leaned more on summaries than direct user voices.

- ChatGPT stood out with vivid, personal quotes from forums and YouTube. It mentioned real metrics like “1,300 rides” and even embedded a video review.

- Claude split its answer into “positive long-term experiences” and “common complaints.” It sounded like a user advisory report, drawing clear lines between value and wear-and-tear concerns.

🧠 Why This Matters

Engines don’t just care about what people say. They care about how consistently they say it. First-person reviews reveal:

- Specific usage data (“used daily for over a year”)

- Emotional resonance (“life changing,” “worth every penny”)

- Real-life drawbacks (“tight shoes,” “dated screen”)

This content feels honest, and that honesty makes it trustworthy.

✅ Brand Takeaway

To help AI engines recognize long-term value:

- Encourage customers to share detailed reviews after months of use

- Collect video testimonials or Reddit-style write-ups with timestamps

- Highlight before and after experiences or lifestyle changes

Don’t just ask “Did you like the product?” Ask “How has this changed your routine over time?”

🔎 Engine Notes

- Perplexity surfaced consistent themes but leaned on editorial-style summaries rather than direct quotes.

- ChatGPT pulled in emotional language, direct stories, and even YouTube content, showing strong pattern detection for long-term use.

- Claude approached it analytically, organizing experiences into benefits and drawbacks. It focused on durability, customer support, and usage habits.

Visual and Structural Signals

When AI engines pull together product recommendations or comparisons, they don’t just rely on what you say. They also look closely at how you say it. Well-structured visuals and clean formatting act as subtle trust signals that help engines understand, extract, and elevate your content.

Think comparison charts, labeled images, and “best for” grids. These visual formats make it easier for AI to map features to use cases and answer questions clearly.

🔍 What We Tested

We asked the engines:

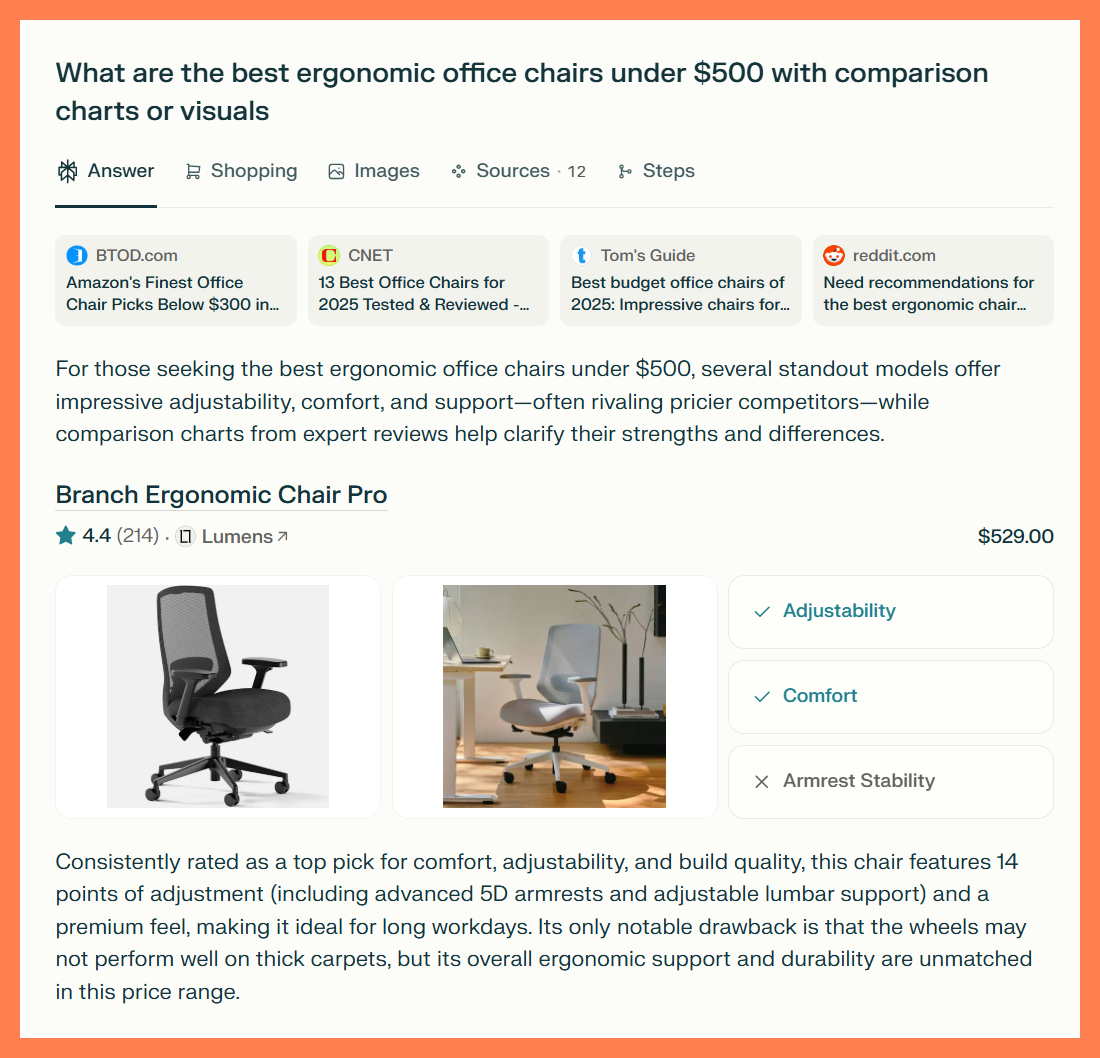

What are the best ergonomic office chairs under $500 with comparison charts or visuals?

Here’s how each one responded:

- Perplexity delivered a rich answer with product images, feature callouts, and structured summaries for each chair. It pulled content from sources like Tom’s Guide and Reddit, but heavily favored those that used tables, checklists, and structured reviews.

- ChatGPT generated a side-by-side comparison table, complete with price ranges, key features, and ideal use cases. It even embedded a YouTube review at the end for additional visual validation.

- Claude emphasized expert rankings and analysis, but prioritized sources that made direct comparisons easier to understand. It highlighted layout and formatting as part of how it evaluated product clarity.

Across the board, engines elevated answers that were not just well written, but visually clear and easy to digest.

🧠 Why This Matters

AI engines are built to scan and summarize information fast. Visual and structural cues make that easier. This helps engines:

- Pull product specs and features with greater accuracy

- Recognize comparisons across multiple options

- Understand user fit, such as “best for tall users” or “ideal for long hours”

Even if the content is solid, if it’s buried in walls of text, it’s less likely to surface in a generative result. Clean structure gives your content a better shot at visibility.

✅ Brand Takeaway

If you want your products or content to be understood and recommended by AI tools:

- Use comparison tables and feature grids when possible

- Include images with clear labels, especially on review or product pages

- Organize content with bullet points, headings, and visual hierarchy

- Mimic the structure of trusted review sites for maximum clarity

You’re not just formatting for humans. You’re formatting for the next generation of machine readers.
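Hand-built comparison tables tend to drift out of sync with your product data. A sturdier approach, sketched here in Python as one option rather than a prescription, is to render every table from the same data, with a `<caption>`, real header cells (`scope="col"` and `scope="row"`), and descriptive `alt` text on each image. The chairs, prices, and image paths are hypothetical.

```python
from html import escape


def comparison_table(caption: str, columns: list[str], rows: list[dict]) -> str:
    """Render product rows as an HTML comparison table.

    The first column is the row label (product name). Each row also needs
    "image" and "alt" keys so every thumbnail carries descriptive alt text.
    """
    name_col, *other_cols = columns
    header = "".join(f'<th scope="col">{escape(h)}</th>' for h in [name_col, "Photo", *other_cols])
    lines = [
        "<table>",
        f"  <caption>{escape(caption)}</caption>",
        f"  <thead><tr>{header}</tr></thead>",
        "  <tbody>",
    ]
    for row in rows:
        cells = [
            f'<th scope="row">{escape(str(row[name_col]))}</th>',
            f'<td><img src="{escape(row["image"])}" alt="{escape(row["alt"])}"></td>',
        ]
        cells += [f"<td>{escape(str(row[c]))}</td>" for c in other_cols]
        lines.append("    <tr>" + "".join(cells) + "</tr>")
    lines += ["  </tbody>", "</table>"]
    return "\n".join(lines)


chairs = [
    {"Chair": "ExampleChair Pro", "Price": "$449", "Best for": "Tall users",
     "image": "/img/examplechair-pro.jpg",
     "alt": "ExampleChair Pro side view with adjustable lumbar support"},
    {"Chair": "ExampleChair Lite", "Price": "$299", "Best for": "Small spaces",
     "image": "/img/examplechair-lite.jpg",
     "alt": "ExampleChair Lite front view with fixed armrests"},
]
table_html = comparison_table("Ergonomic chairs under $500", ["Chair", "Price", "Best for"], chairs)
print(table_html)
```

The "Best for" column is deliberate: it maps each product to a use case in the same way the review sites in our tests did.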

🔎 Engine Notes

- Perplexity gave the most weight to clean layouts with labeled visuals and feature summaries. It often pulled structured answers straight from articles that used charts or tables.

- ChatGPT generated a full table on its own, showing that it values structured comparisons and can amplify them when present.

- Claude respected formatting as part of how it assessed clarity and credibility. It favored sources that were both well informed and well organized.

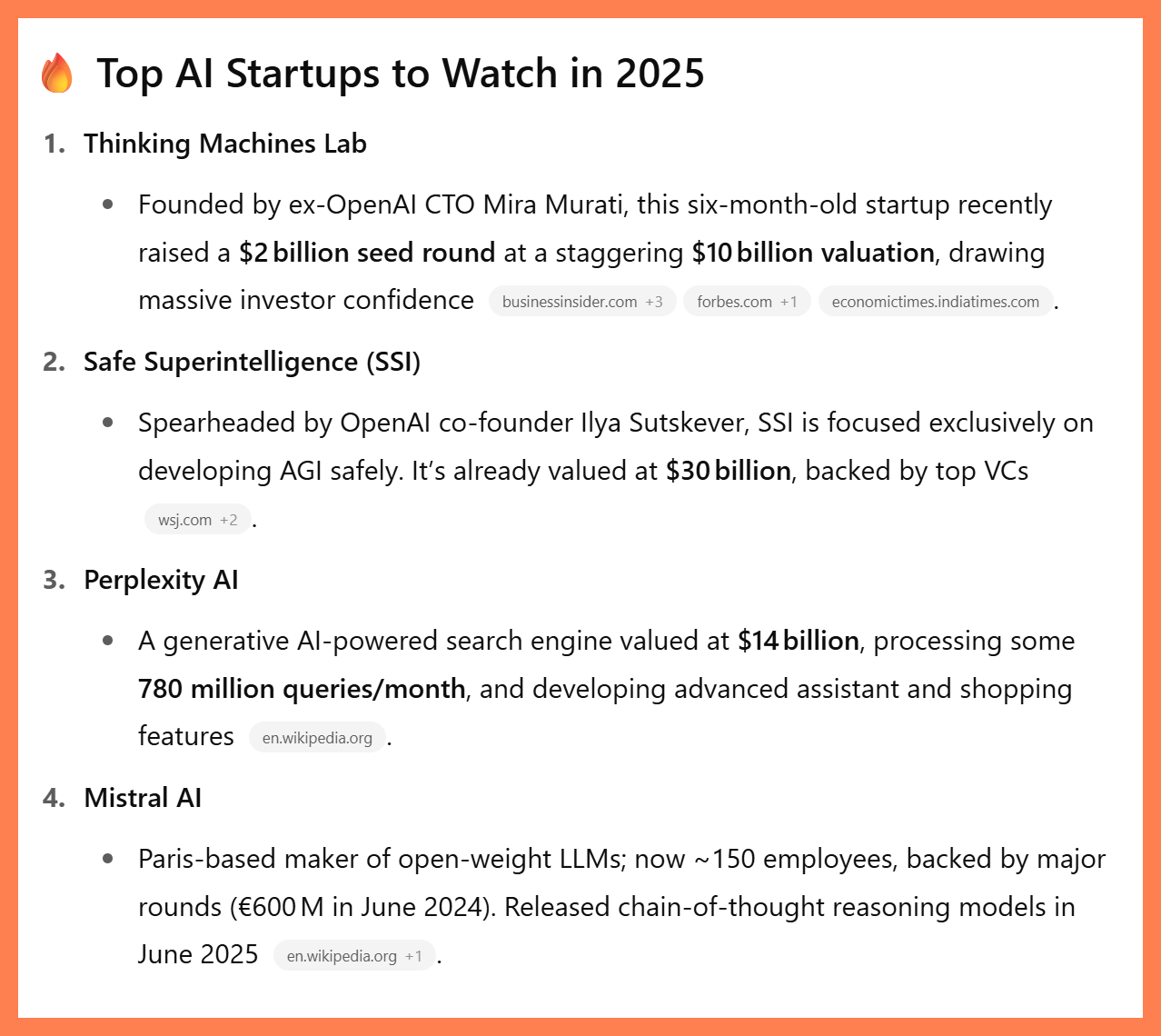

Reputable Mentions in Curated Lists

If your brand makes a “Top AI Startups to Watch” list, engines notice. These curated roundups from outlets like Forbes, TechCrunch, or CB Insights act as third-party trust signals. They suggest your brand is credible, innovative, and backed by momentum.

🔍 What We Tested

We asked the engines:

What are the most reputable AI startups to watch in 2025?

Across all three tools, we looked for what counted as reputable and what sources they leaned on to decide.

Here’s what we saw:

- ChatGPT prioritized startups with massive funding, famous founders, or ties to OpenAI. It called out high-profile investors and valuations, treating them as reputation signals.

- Perplexity surfaced companies featured in Forbes, TechCrunch, and MIT Sloan lists. It included funding data, product focus, and endorsements from VCs, suggesting it pulls heavily from curated editorial roundups.

- Claude leaned on structured categories from CB Insights, organizing companies by function like agents, infrastructure, or vertical apps. It highlighted funding leaders and trends, not just names.

🧠 Why This Matters

Reputation in generative engines is not just about brand awareness. It is about where and how your brand shows up in respected roundups. These signals do a few key things:

- Help engines infer industry leadership

- Act as shortcuts for credibility, especially in fast-moving sectors

- Highlight innovation through proxy indicators like funding, team, or media coverage

And because engines learn from, and often retrieve, public web content, third-party lists extend your brand’s presence far beyond your own site.

✅ Brand Takeaway

If you want your brand to show up in AI-generated rankings or industry questions:

- Pitch your startup to trusted roundups and showcases like the Forbes AI 50, the CB Insights AI 100, TechCrunch Disrupt, or analyst briefings

- Highlight funding news, founder expertise, and technical achievements in a structured PR-ready format

- Use schema markup for the sameAs, founder, and award properties on your site where applicable

- Be visible in investor-facing channels like Crunchbase, AngelList, and Sifted

Engines treat trusted editorial mentions as a form of earned authority. The more you show up in respected lists, the more likely you are to be surfaced in answers.
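For the `sameAs`, `founder`, and `award` markup mentioned above, a minimal Organization block might look like the sketch below. `sameAs` points from your site to the same company’s profiles elsewhere, which is meant to help tie your own pages to the third-party mentions engines already see. Every name, URL, and award here is a hypothetical placeholder.

```python
import json


def organization_jsonld(name: str, url: str, founders: list[str],
                        profiles: list[str], awards: list[str]) -> dict:
    """schema.org Organization with founders, cross-site profiles, and awards."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "founder": [{"@type": "Person", "name": f} for f in founders],
        "sameAs": profiles,  # official profiles for this same company on other sites
        "award": awards,
    }


org = organization_jsonld(
    "Example AI Labs",
    "https://www.example.com",
    founders=["Priya Nair", "Marcus Chen"],
    profiles=[
        "https://www.crunchbase.com/organization/example-ai-labs",  # hypothetical profile URL
        "https://www.linkedin.com/company/example-ai-labs",  # hypothetical profile URL
    ],
    awards=["Example Media Top AI Startups to Watch 2025"],  # hypothetical list name
)
print(json.dumps(org, indent=2))
```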

🔎 Engine Notes

- Perplexity echoed curated lists verbatim, favoring startups with strong editorial presence and investment visibility.

- ChatGPT drew from hype cycles, favoring billion-dollar valuations, OpenAI connections, and media-friendly milestones.

- Claude offered the most structured approach, organizing startups by sector and signaling trends like multi-agent systems, privacy tech, or vertical AI.

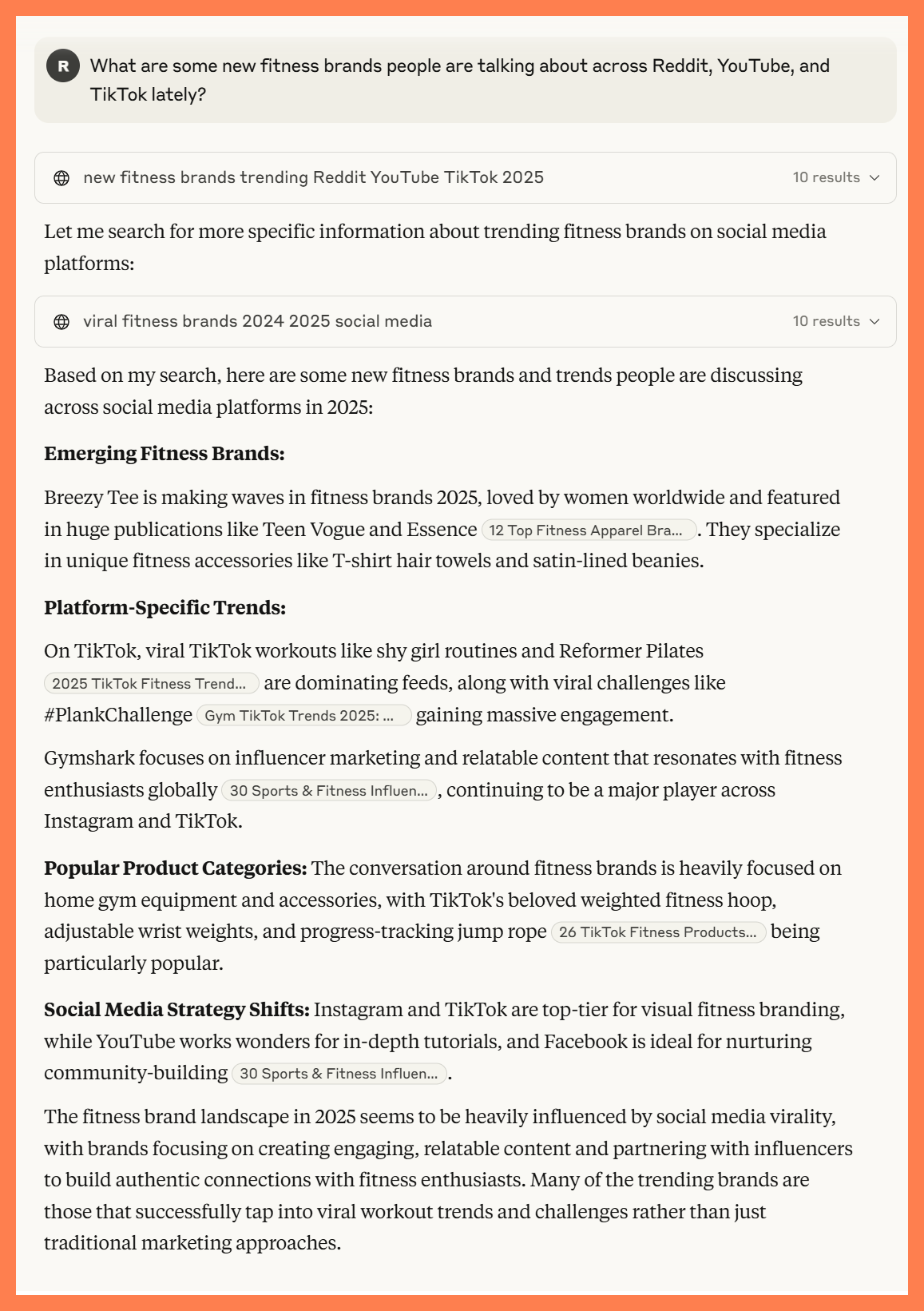

Social Momentum and Virality

Some brands don’t need to win awards or get five-star reviews to show up in generative search. Sometimes, being the brand everyone is talking about is enough.

Social momentum has become a powerful AI trust signal. When users across Reddit, TikTok, YouTube, and other social platforms mention a product over and over, especially in a positive or trend-forward way, AI engines start to notice. And they use that signal to guide recommendations.

🔍 What We Tested

We asked ChatGPT, Claude, and Perplexity:

What are some new fitness brands people are talking about across Reddit, YouTube, and TikTok lately?

The results weren’t just a list of big-name companies. They reflected where the conversation was happening and which brands were riding that wave.

- Perplexity returned a curated editorial-style roundup of trending fitness brands, citing multiple sources and platforms. It emphasized brands like Gymshark, Vuori, and Satisfy Running for their growing cross-platform presence and highlighted newer names like Miller Running and Soar.

- ChatGPT took a storytelling approach. It pulled in real user quotes from Reddit, highlighted try-on videos from YouTube, and noted viral product buzz on TikTok. It added commentary on why each brand was gaining traction, like Halara’s success with data-driven fashion or Amp’s innovation in fitness tech.

- Claude broke the answers into trend types and platform behavior. It talked about how TikTok drives virality, YouTube drives deep engagement, and Reddit builds community-driven trust. It also called out shifts in strategy, like brands focusing more on authenticity, short-form content, and influencer credibility.

🧠 Why This Matters

Generative engines are built to reflect user intent. And when that intent overlaps with real cultural momentum, social signals help tip the scales.

It’s not just about influencer reach or going viral once. AI engines pick up on patterns across platforms, like:

- Recurring brand mentions in forums or video reviews

- Content that gets lots of engagement or shares

- Momentum that spans different communities (not just one viral moment)

This makes social buzz a kind of real-time AI trust signal. It shows the product has traction, not just from experts, but from people who use it, love it, and talk about it.

✅ Brand Takeaway

You don’t need to dominate every social channel. But to benefit from this trust signal, you should:

- Cultivate community engagement, especially on TikTok, Reddit, and YouTube

- Encourage real users to post content and reviews

- Use influencer partnerships strategically to amplify real signals, not just ads

- Track and respond to emerging conversations around your brand

Remember, it’s more than simply being seen. You want others to talk about your brand in ways AI can notice and learn from.

🔎 Engine Notes

- Perplexity leaned on aggregate cross-platform validation. It treated social buzz like an editorial data point, favoring brands that showed up in multiple sources and threads.

- ChatGPT gave detailed, human-toned summaries. It echoed actual reviews and forum chatter, surfacing smaller or emerging brands that had compelling stories or cult followings.

- Claude emphasized the shape of social momentum. It focused on where buzz was happening and how that affected trust, showing that platform context plays a role in AI evaluations.

Local AI Trust Signals

When someone searches for a nearby business or service, AI engines shift their trust radar toward location-based signals. This isn’t just a matter of overall popularity. It’s driven by local relevance, community sentiment, and authentic voices.

🔍 What We Tested

We asked ChatGPT, Claude, and Perplexity:

Where do locals in Austin go for the best Thai food?

This kind of query relies heavily on geographic trust, and the engines delivered strong local insights:

- Perplexity leaned on regional voices and guides like Eater Austin, Yelp neighborhood threads, and Austin-specific Reddit subcommunities. It surfaced places such as Mai Thai, Super Thai Cuisine, and the Dee Dee food truck, all cited across multiple platforms.

- ChatGPT curated recommendations with human-style language, including quotes like “If Dee Dee is open, that’s where I go.” It grouped picks as “Highly rated by locals” versus “Sit-down favorites” and included TikTok and YouTube clips when relevant.

- Claude offered deeper local nuance. Rather than repeating recommendations, it flagged details such as wait time, menu style (spicy, vegetarian), and vibe (late night, casual). This reflects Claude’s emphasis on neighborhood context during its trust assessment.

🧠 Why This Matters

Local AI trust signals help generative search tools answer location-specific queries with authority. These include:

- Mentions in locally focused guides, blogs, and publications

- Discussions in forums like Reddit or neighborhood Facebook groups

- Descriptive user language referencing “locals” or “community favorites”

- Repeated appearances across platforms tied to a geographic area

- Contextual elements such as operating hours, regional style, or crowd patterns

These AI trust signals matter for any business with a physical presence, from restaurants and salons to plumbers and retail stores. Local signals show AI that real people in that place love what you offer.

✅ Brand Takeaway

To build local visibility in AI search:

- Secure mentions in reputable local publications or “best of” city guides

- Encourage nearby customers to review your business with locational context (e.g., “best pad Thai in East Austin”)

- Participate in local forums or neighborhood communities to increase visibility

- Use local schema markup such as LocalBusiness (or a subtype like Restaurant), with PostalAddress and GeoCoordinates for your location

- Make your real-world signals easy for AI to read and connect across sources
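To show how those local types fit together, here is a minimal sketch for a restaurant. It uses `Restaurant`, a subtype of `LocalBusiness`, with a `PostalAddress`, `GeoCoordinates`, and `openingHoursSpecification`, which covers the operating-hours cue listed above. The business, address, coordinates, and hours are hypothetical.

```python
import json


def restaurant_jsonld(name: str, cuisine: str, address: dict,
                      lat: float, lon: float, hours: list[tuple]) -> dict:
    """schema.org Restaurant (a LocalBusiness subtype) with address, geo, and hours."""
    return {
        "@context": "https://schema.org",
        "@type": "Restaurant",
        "name": name,
        "servesCuisine": cuisine,
        "address": {"@type": "PostalAddress", **address},
        "geo": {"@type": "GeoCoordinates", "latitude": lat, "longitude": lon},
        "openingHoursSpecification": [
            {"@type": "OpeningHoursSpecification", "dayOfWeek": days,
             "opens": opens, "closes": closes}
            for days, opens, closes in hours
        ],
    }


place = restaurant_jsonld(
    "Example Thai Kitchen",
    "Thai",
    {"streetAddress": "123 Example St", "addressLocality": "Austin",
     "addressRegion": "TX", "postalCode": "78702", "addressCountry": "US"},
    lat=30.2622,
    lon=-97.7195,
    hours=[(["Tuesday", "Wednesday", "Thursday"], "17:00", "22:00"),
           (["Friday", "Saturday"], "17:00", "23:30")],
)
print(json.dumps(place, indent=2))
```

Keep the name, address, and hours here identical to what appears on your Google, Yelp, and local-guide listings, so every source describes the same place the same way.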

🔎 Engine Notes

- Perplexity prioritized local sources and community conversations, signaling that it views geographic specificity as a high-trust cue.

- ChatGPT highlighted quotes and narratives that reflected community opinion, showing it values authentic, local sentiment.

- Claude provided a structured interpretation of local experience, analyzing not just the names of businesses but how and why they matter to the community.

🔎 How Each Engine Prioritizes AI Trust Signals (At a Glance)

After testing how ChatGPT, Perplexity, and Claude respond to dozens of prompts across ten trust signal categories, clear patterns started to emerge.

Each engine has its own way of interpreting and weighting these signals. Some focus more on structure and authority, while others emphasize context and user sentiment.

To make those patterns easier to see and act on, here’s a side-by-side snapshot of how each engine responds to the most important trust cues in generative search:

| Trust Signal | ChatGPT | Perplexity | Claude |
|---|---|---|---|
| Authoritative Lists | ✅✅✅: Uses ranked editorial sources like LaptopMag and PCMag | ✅✅✅: Relies on structured buying guides and list formats | ✅✅: Leans on lists, but reframes them conversationally |
| Certifications & Awards | ✅✅✅: Groups products by certification; uses as sorting logic | ✅✅: Surfaces badges via trusted sources | ✅✅✅: Treats certifications as core reasoning |
| User Reviews & Ratings | ✅✅: Echoes Amazon/Reddit sentiment, quotes users | ✅✅✅: Highlights reviews and ratings in structured layouts | ✅✅: Pulls from expert summaries of user feedback |
| Structured Product Info | ✅✅✅: Categorizes by use case, extracts spec blocks | ✅✅✅: Favors comparison charts and tables | ✅✅: Highlights consistent product features |
| Expert or Primary Sources | ✅✅: Blends tools and experts, but soft attribution | ✅✅✅: Names authors and credentials directly | ✅✅✅: Most explicit about expert identity and authority |
| First-Person Reviews | ✅✅✅: Pulls emotional language, forums, YouTube quotes | ✅✅: Summarizes sentiment but less quote-rich | ✅✅: Analyzes experience over time with clear breakdowns |
| Visual & Structural Signals | ✅✅✅: Generates tables, embeds videos, echoes structure | ✅✅✅: Favors clean layout, callouts, visuals | ✅✅: Considers formatting as part of clarity |
| Reputable List Mentions | ✅✅: Favors big names and funding headlines | ✅✅✅: Echoes editorial “Top” lists with full context | ✅✅✅: Organizes by vertical, emphasizes industry presence |
| Social Momentum | ✅✅✅: Quotes from Reddit, TikTok buzz, viral stories | ✅✅: Aggregates cross-platform mentions | ✅✅✅: Tracks platform-specific dynamics and tone |
| Local Signals | ✅✅: Leans on user tone (“locals love it”), includes quotes | ✅✅✅: Highlights Reddit, Eater, neighborhood sources | ✅✅✅: Adds qualitative context (vibe, wait time, crowd) |

AI Trust Signals = the New Rules of Visibility

Generative engines don’t work like traditional search engines. They don’t simply index pages and match keywords; they prioritize content that reflects strong AI trust signals, including patterns of credibility, structure, and repetition.

That shift changes the game for brand visibility.

The ten AI trust signals we’ve covered (from expert lists to structured data, from social momentum to local credibility) aren’t just SEO tweaks. They’re visibility fundamentals for the AI age. If your brand wants to show up when people ask tools like ChatGPT or Perplexity what they should buy, try, or trust, you need to start thinking like the engine.

So ask yourself:

- Are we showing up across credible third-party sources?

- Is our content structured in ways machines can easily read?

- Do our trust signals appear consistently, across platforms and formats?

- Are we helping real people say real things about us that AI can find and believe?

Because in the world of generative search, you don’t get ranked. You get referenced. And every reference starts with trust.

Want to turn these trust signals into top placement?

Check out our latest research on how to be the first brand mentioned in AI search. We ran 35 real prompts through leading engines to uncover what actually influences that top brand slot.

What are AI trust signals?

AI trust signals are the patterns, structures, and sources that generative search engines rely on to determine which brands to include in their answers. These can include things like ranked lists, consistent third-party mentions, expert-backed content, and schema markup.
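Schema markup is the most hands-on item in that list. As a minimal sketch, the Python below builds a schema.org `Product` description in JSON-LD, including a `Brand` and an `AggregateRating`, and wraps it in the `<script type="application/ld+json">` tag that pages embed in their HTML. The helper names, product, brand, and rating figures are hypothetical placeholders, and whether a given engine reads this markup when forming an answer is an assumption our prompt tests did not measure directly.

```python
import json


def product_jsonld(name, brand, description, rating_value, review_count):
    """Build a schema.org Product object serialized as JSON-LD (illustrative sketch)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "description": description,
        # Aggregate review data; only publish figures that match real reviews.
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld):
    # Wrap the JSON-LD in the script element that goes in the page's HTML.
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


# Hypothetical product; replace with your own catalog data.
snippet = product_jsonld(
    name="Example Standing Desk",
    brand="ExampleCo",
    description="Height-adjustable standing desk with memory presets.",
    rating_value=4.6,
    review_count=1280,
)
print(script_tag(snippet))
```

Generating the markup from the same data that feeds the visible product page helps keep the two consistent, which matters more than any single field.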

Why do AI trust signals matter for brand visibility?

AI tools like ChatGPT, Claude, and Perplexity summarize answers from across the web. If your content doesn’t show the right signals of authority and clarity, it’s unlikely to be included, meaning your brand stays invisible in the results users actually see.

How do AI trust signals differ from traditional SEO signals?

While traditional SEO has focused on backlinks and keywords, AI trust signals prioritize clarity, usefulness, and recognizable credibility. The goal isn't just to rank; it's to be quotable and trustworthy in the eyes of an LLM.

Can small or newer brands still earn AI trust?

Yes, but they need to be strategic. Clear brand-forward language, appearances in third-party content, and participation in discussions like Reddit threads or expert roundups can all help signal legitimacy even without huge domain authority.

What kind of content performs well for AI visibility?

Content that is concise, well-structured, and written like a helpful answer tends to perform best. AI tools favor full sentences, clear takeaways, and content that mirrors how people naturally ask and answer questions.

Do AI tools evaluate trust signals the same way?

Not exactly. Perplexity may lean more on source links and real-time citations, while ChatGPT focuses more on well-structured content from its training data. Claude tends to favor expert-backed and ethical sources. Understanding the nuance helps you tailor your strategy.

How can I check if my content gives off strong trust signals?

Use tools like Trularity’s AI Visibility Grader or simply ask an AI tool a relevant question and see if your brand appears. If not, it’s a sign to revisit your structure, sources, and phrasing.
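If you run that manual check across many prompts, a small script can make the results easier to compare. The sketch below assumes you have pasted each engine's answer into a string; it does not call any AI API. It reports which brands appear and in what order, which is one simple way to track who gets mentioned first. The function names, brand names, and sample answer are all hypothetical.

```python
import re


def mention_order(answer, brands):
    """Return the brands found in an answer, ordered by first mention."""
    positions = {}
    for brand in brands:
        # Case-insensitive whole-word match; assumes brand names start and end
        # with a letter or digit so the \b word boundaries apply.
        match = re.search(rf"\b{re.escape(brand)}\b", answer, flags=re.IGNORECASE)
        if match:
            positions[brand] = match.start()
    return sorted(positions, key=positions.get)


def report(answer, brands, your_brand):
    """Summarize whether, and how early, your brand appears in the answer."""
    order = mention_order(answer, brands)
    if your_brand not in order:
        return f"{your_brand}: not mentioned"
    return f"{your_brand}: mentioned #{order.index(your_brand) + 1} of {len(order)}"


# Hypothetical answer text pasted from an AI tool.
answer = (
    "For a home office, many reviewers recommend DeskPro first. "
    "LiftWorks is a solid budget pick, and ErgoMax is popular on Reddit."
)
brands = ["ErgoMax", "DeskPro", "LiftWorks", "StandRight"]
print(mention_order(answer, brands))
print(report(answer, brands, "ErgoMax"))
print(report(answer, brands, "StandRight"))
```

Because AI answers vary between runs, repeating the same prompt several times and tallying the results gives a steadier picture than a single check.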